That’s a bit of a mouthful as a title – but it encapsulates all the things I’m hoping to cover in this post and the end goal.

The first step is to create a point cloud using the depth data that we get from the Kinect camera. To do this we’ll need the calibration details we obtained previously (see Kinect V2 Depth Camera Calibration) and the geometry of the “Pinhole Camera”.

If you look up the Wikipedia article on the Pinhole camera model you will find that this model describes the mathematical relationship between the coordinates of a 3D point and its projection onto the image plane. We’re going to use this model to get the X and Y values in the 3D world from three things: a given depth value (the Z co-ordinate in the 3D world), the X and Y co-ordinates in the image plane, and the depth camera intrinsics we obtained previously.

This is a picture of the pinhole camera geometry:

If we look at this from the direction of the X2 axis we see this:

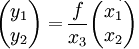

If we look at this we can see two similar triangles, both having parts of the projection line (green) as their hypotenuses. This then leads us to the following equation:
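The equation itself appears to be missing at this point. Reconstructed from the “Rotated image and the virtual image plane” section of the Wikipedia article that the next paragraph refers to (so treat it as a reconstruction, not the original figure), with f the focal length, it reads:

$$
\begin{pmatrix} y_1 \\ y_2 \end{pmatrix} = \frac{f}{x_3} \begin{pmatrix} x_1 \\ x_2 \end{pmatrix}
$$

Without the 180° rotation, the same similar triangles give $-y_1/f = x_1/x_3$, i.e. the same relation with a minus sign.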

This is an expression that describes the relation between the 3D coordinates (x_1, x_2, x_3) of point P and its image coordinates (y_1, y_2), given by point Q in the image plane. To obtain this particular equation we have rotated the coordinate system in the image plane by 180° and placed the image plane so that it intersects the X3 axis at f instead of at -f (this is the equation given in the “Rotated image and the virtual image plane” section of the Wikipedia article).

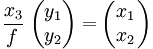

If we re-arrange this equation (using simple algebra) so that everything we know is on one side and the things we’re looking to obtain are on the other side we get:

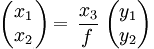

And we can re-arrange this again so that the things we’re looking to obtain are on the left-hand side of the formula and the things we know are on the right-hand side of the formula:
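The rearranged equation also seems to be missing here. Solving the pinhole relation $y_i = f\,x_i / x_3$ for the two unknown 3D co-ordinates (a reconstruction using the same notation) gives:

$$
x_1 = \frac{x_3\, y_1}{f}, \qquad x_2 = \frac{x_3\, y_2}{f}
$$

In practice the calibration gives separate focal lengths for the x and y axes of the image, so each line gets its own f.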

Everything in this equation that has an “x” is in the 3D world (x1=x, x2=y, x3=z in 3D). Everything that has a “y” in the equation is in the image view (y1=x, y2=y in the image view). The Wikipedia article seems to have gone out of its way to make things more complicated!

If you look at the original Pinhole camera model geometry you will notice that the origin of the image plane co-ordinates is at the principal point (roughly the middle of the image, where the optical axis meets the image plane), and that the image plane sits one focal length away from the pinhole. The co-ordinates we’ll be obtaining from the image have (0,0) in the top left-hand corner rather than at the principal point. So we’ll need to convert our co-ordinates into the correct position for our formula (we’ll simply subtract the principal point).

x_3D = (x_image - camera_principal_point_x) * z_3D / camera_focal_length_x

y_3D = (y_image - camera_principal_point_y) * z_3D / camera_focal_length_y

In the above formula z_3D is the depth value at the given (x_image,y_image) point.
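As a minimal sketch of the two formulas above for a single pixel: the function name and the intrinsic values in the example are hypothetical placeholders (round numbers chosen for easy arithmetic), not real Kinect calibration results.

```python
from typing import Tuple


def depth_pixel_to_point(
    x_image: float,
    y_image: float,
    z_3d: float,
    focal_length_x: float,
    focal_length_y: float,
    principal_point_x: float,
    principal_point_y: float,
) -> Tuple[float, float, float]:
    """Back-project one depth pixel to a 3D point using the pinhole model.

    (x_image, y_image) have (0, 0) at the top-left corner, so the principal
    point is subtracted first to move the origin onto the optical axis.
    """
    x_3d = (x_image - principal_point_x) * z_3d / focal_length_x
    y_3d = (y_image - principal_point_y) * z_3d / focal_length_y
    return x_3d, y_3d, float(z_3d)


# Hypothetical intrinsics: fx = fy = 400, principal point (256, 212).
print(depth_pixel_to_point(356, 112, 1000, 400.0, 400.0, 256.0, 212.0))
```

Because image rows grow downwards, y_3D here is positive below the principal point; flip its sign if you prefer a Y-up world.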

That’s all we need to generate our point cloud from our depth image. We could then go on to render that point cloud in 3D if we wanted. This is what the Depth-D3D sample did with version 1.8 of the Kinect SDK. Version 2.0 of the SDK doesn’t yet provide an updated version of this sample.
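Applying the formula to every pixel gives the point cloud. The sketch below assumes a row-major depth buffer (the Kinect V2 depth frame is 512 x 424) and skips pixels whose depth is 0, on the assumption that 0 means “no reading”; the function name is hypothetical and this is not the Depth-D3D sample’s code.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_image_to_point_cloud(
    depth: Sequence[int],
    width: int,
    height: int,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Convert a row-major depth image into a list of 3D points."""
    if len(depth) != width * height:
        raise ValueError("depth buffer size does not match width * height")
    points: List[Point] = []
    for row in range(height):
        for col in range(width):
            z = depth[row * width + col]
            if z == 0:
                continue  # assumed to mean no valid depth at this pixel
            x = (col - cx) * z / fx
            y = (row - cy) * z / fy
            points.append((x, y, float(z)))
    return points
```

The output keeps the depth units, so millimetre depths give millimetre X and Y.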

What I propose to do is calculate the normals for the 3D point cloud and then render that in 2D by mapping the (x,y,z) normal vector values to R-G-B channel values. I’ll do that in Part 2 of this article as I’ve already done a lot just to get here!
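Until Part 2, here is one common way to do this, which is not necessarily the approach Part 2 will take: keep the points in the depth image’s grid, take the cross product of the vectors to the lower and right neighbours, normalise it, and map each component from [-1, 1] to [0, 255]. All names are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def subtract(a: Vec3, b: Vec3) -> Vec3:
    """Component-wise a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product a x b."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def grid_normal(grid: Sequence[Sequence[Vec3]], row: int, col: int) -> Optional[Vec3]:
    """Estimate the unit normal at grid[row][col] from its right and lower neighbours.

    Returns None on the last row/column or for a degenerate neighbourhood.
    """
    if row + 1 >= len(grid) or col + 1 >= len(grid[row]):
        return None
    p = grid[row][col]
    right = subtract(grid[row][col + 1], p)
    down = subtract(grid[row + 1][col], p)
    # With x to the right, y down and z into the scene, down x right points
    # back towards the camera for a surface that faces it.
    n = cross(down, right)
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if length == 0.0:
        return None
    return (n[0] / length, n[1] / length, n[2] / length)


def normal_to_rgb(n: Vec3) -> Tuple[int, int, int]:
    """Map each unit-normal component from [-1, 1] to an 8-bit channel value."""
    r, g, b = (round((c + 1.0) * 0.5 * 255) for c in n)
    return (r, g, b)
```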

Hey Ian, great post!

I do have a question about how we can obtain z_3D from the raw depth values.

Also, what type were the raw depth values you used? Does it depend on the driver?

Thanks!

I figured it out. I was only getting unsigned 8-bit values because I had accidentally converted the data so that I could save the raw depth data as a grayscale picture.
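For reference, Kinect V2 depth frames hold unsigned 16-bit values in millimetres, which can be used directly as z_3D. The sketch below decodes such a buffer (assuming little-endian byte order, as on a typical x86 PC) and shows why an 8-bit greyscale copy loses information; the function names and the 4500 mm display range are assumptions for illustration.

```python
import struct
from typing import List


def unpack_depth_frame(raw: bytes) -> List[int]:
    """Decode unsigned 16-bit depth values (millimetres), assumed little-endian."""
    count = len(raw) // 2
    return list(struct.unpack("<%dH" % count, raw[: count * 2]))


def to_8bit_grey(depth_mm: int, max_mm: int = 4500) -> int:
    """Squeeze 0..max_mm into 0..255 for display only; this is lossy."""
    clamped = min(max(depth_mm, 0), max_mm)
    return clamped * 255 // max_mm


# 1000 mm and 1005 mm end up as the same 8-bit grey level.
print(to_8bit_grey(1000), to_8bit_grey(1005))
```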

Hi Bi,

Your question has given me a topic for a future post – saving depth images as 16-bit PNG files. Glad you worked out what your problem was.
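As a rough illustration of that idea only (not the future post’s code), a 16-bit greyscale PNG can be written with the Python standard library: an IHDR chunk with bit depth 16 and colour type 0, one zlib-compressed IDAT chunk whose scanlines each start with filter byte 0 and hold big-endian samples, then IEND. The function name is hypothetical.

```python
import struct
import zlib
from typing import Sequence


def _chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Length, type, data, then CRC-32 over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def encode_png_gray16(depth: Sequence[int], width: int, height: int) -> bytes:
    """Encode row-major 16-bit depth values as a greyscale PNG."""
    if len(depth) != width * height:
        raise ValueError("depth buffer size does not match width * height")
    # width, height, bit depth 16, colour type 0 (grey), default methods
    ihdr = struct.pack(">IIBBBBB", width, height, 16, 0, 0, 0, 0)
    rows = bytearray()
    for row in range(height):
        rows.append(0)  # filter type 0 (None) for this scanline
        start = row * width
        rows += struct.pack(">%dH" % width, *depth[start:start + width])
    return (
        b"\x89PNG\r\n\x1a\n"
        + _chunk(b"IHDR", ihdr)
        + _chunk(b"IDAT", zlib.compress(bytes(rows)))
        + _chunk(b"IEND", b"")
    )
```

Writing the result with `open("depth.png", "wb")` keeps the full millimetre range rather than squeezing it into 8 bits.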

Ian